Even though it’s over ten years old, Kubernetes is still a relatively new paradigm for deploying applications compared to the overall lifespan of software. During that time, it has become one of the leading container solutions with 24% of market share. Today, even more companies have “leveraging the power of containerization” in their IT roadmap. After Broadcom's acquisition of VMware, many teams are looking to move virtual machines and, at the same time, use the opportunity to shift properly into a Kubernetes environment. However, Kubernetes can be a struggle for older, legacy applications that weren't built to take full advantage of it.

In part one of this blog series, I’ll explain the issue of using Kubernetes with older applications and the special considerations that may be needed to enable them to work.

What is a legacy application?

If age is just a number, then “legacy” is just a word! Legacy applications are older software systems that are still in productive use within many organizations due to their critical role in day-to-day operations and their ability to perform specific functions reliably. “Legacy” does not equal old or broken. These applications often hold significant value because they have been extensively customized over the years to meet the unique needs of the business, making them deeply integrated into various processes. Additionally, legacy systems can be cost-effective because they avoid the need for immediate large-scale investments in new technologies.

However, legacy systems do have drawbacks, including being built around older development models, practices, and paradigms. This can make them challenging to deploy in a modern, containerized environment.

What is Kubernetes?

Kubernetes (K8s) is an open-source container orchestration system for automating software deployment, scaling, and management. It originated at Google, which open-sourced it in 2014 and released version 1.0 in 2015, and it is now maintained by a global open-source community under the Cloud Native Computing Foundation. The name comes from the ancient Greek word for “helmsman” or “pilot,” and the terminology around it is nautically themed to match. It has quickly become the leading platform for container orchestration, allowing teams to manage their containerized applications more efficiently.

Kubernetes Distribution Platforms:

- Red Hat OpenShift (IBM): OpenShift provides a robust, secure, and fully integrated environment that supports hybrid and multi-cloud strategies. Unlike other Kubernetes-based platforms, OpenShift includes advanced features such as automated updates, integrated CI/CD pipelines, and extensive security controls out-of-the-box, reducing the need for additional configuration and third-party tools.

- VMware Tanzu: VMware Tanzu is a suite of products and services designed to modernize applications and infrastructure through a streamlined Kubernetes-based platform. Tanzu enables enterprises to run and manage Kubernetes clusters across multiple clouds with ease. Unlike other Kubernetes-based platforms, Tanzu integrates tightly with VMware’s robust virtualization and cloud infrastructure tools, offering seamless interoperability with vSphere, NSX, and other VMware technologies. It provides comprehensive capabilities for application lifecycle management, including automated updates, integrated CI/CD pipelines, and observability tools, all within a unified platform.

- Nutanix NKE/Karbon: Nutanix Karbon, part of the Nutanix Cloud Native suite, integrates seamlessly with Nutanix’s hyperconverged infrastructure, offering a robust, secure, and fully integrated environment. Unlike other Kubernetes-based platforms, Karbon provides deep integration with Nutanix Prism, allowing unified management of both virtual machines and containers. It offers automated updates, built-in monitoring, and comprehensive security features out-of-the-box, reducing the need for additional configuration and third-party tools.

- AKS, EKS, GKE and others: Several other prominent Kubernetes platforms provide alternative robust solutions. Azure Kubernetes Service (AKS) by Microsoft offers an integrated experience with Azure, providing built-in monitoring, security, and scaling capabilities. Amazon Elastic Kubernetes Service (EKS) is Amazon Web Services' (AWS) managed Kubernetes service, known for its deep integration with AWS services, high availability, and scalability. Google Kubernetes Engine (GKE) by Google Cloud is renowned for its ease of use, advanced auto-scaling, and security features, leveraging Google’s expertise in running Kubernetes at scale. Each of these platforms offers unique features and integrations, catering to different enterprise needs and cloud strategies, enabling organizations to efficiently manage their containerized workloads across diverse environments.

Automate Operational Application Tasks:

- Deployment and Changes/Releases: Kubernetes automates the deployment process using resources such as Deployments and StatefulSets. These resources define the desired state of the application, and Kubernetes works continuously to make the actual state match it. For changes and releases, Kubernetes supports rolling updates, which gradually replace instances of the old version of an application with instances of the new version. This allows for updates with minimal downtime and easy rollback in case of failures.

- Scaling: Kubernetes offers horizontal pod autoscaling, which automatically adjusts the number of pod replicas based on the current load. This ensures that applications can handle varying traffic levels efficiently. Kubernetes monitors metrics such as CPU and memory usage and scales the application up or down to meet demand, ensuring optimal resource utilization and cost efficiency.

- Monitoring: Kubernetes integrates with various monitoring tools to provide comprehensive insights into the health and performance of applications. Resource usage data is typically collected by the Metrics Server add-on, which is installed separately on many clusters, while tools like Prometheus provide detailed monitoring and alerting. Kubernetes also supports logging through integrations with systems like Elasticsearch and Fluentd. These monitoring capabilities help in proactive issue detection and resolution, maintaining the reliability and availability of applications.
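To make the scaling behavior above more concrete, here is a minimal Python sketch of the core replica calculation that horizontal pod autoscaling is documented to use: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The function name, the default bounds, and the sample numbers are assumptions for illustration. The real autoscaler also applies a tolerance band and stabilization windows, which this sketch leaves out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Simplified HPA rule: ceil(current_replicas * current / target),
    clamped to the configured minimum and maximum."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical example: 4 pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90.0, 60.0))
```

For a legacy application, the clamp matters as much as the formula: software that cannot safely run as several copies may need the maximum pinned to 1.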

Kubernetes Building Blocks

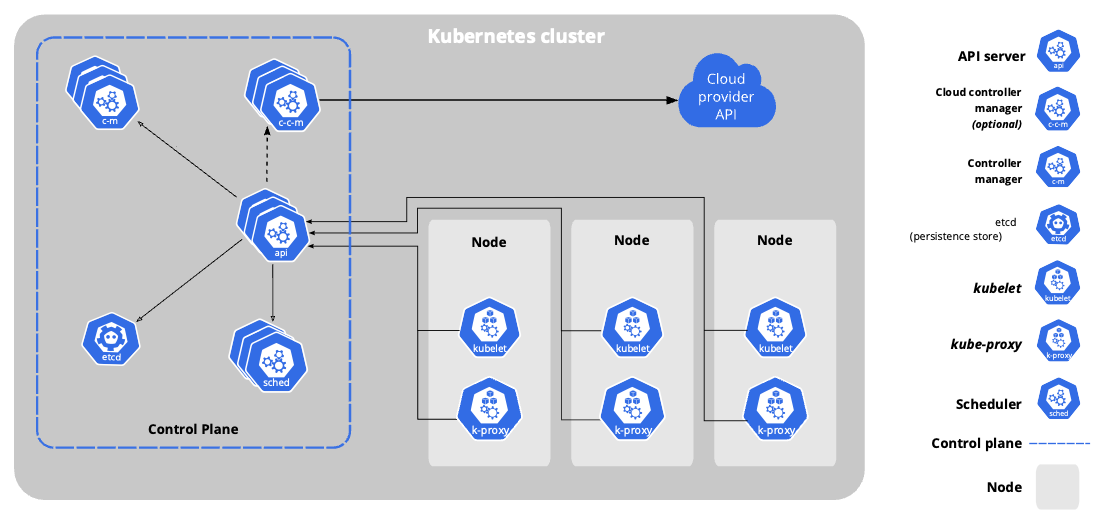

A Kubernetes cluster consists of several key components, each serving a specific purpose in managing containerized applications. No single diagram can encompass all the options, but here is one configuration:

- Nodes: These are the underlying machines (physical or virtual) that run your applications. Each node typically runs a container runtime (such as containerd or CRI-O; since Kubernetes 1.24, Docker Engine requires the cri-dockerd adapter) and an agent called the kubelet, which communicates with the Kubernetes control plane.

- Control Plane: Formerly called the master, the control plane is responsible for managing the cluster. It includes several components:

  - API Server: Acts as the front end for Kubernetes, accepting and processing requests from users and other components.

  - Scheduler: Assigns pods (groups of containers) to nodes based on resource availability and constraints.

  - Controller Manager: Monitors the state of the cluster and responds to changes, ensuring that the desired state is maintained.

  - etcd: A distributed key-value store that holds the cluster's configuration and state data, ensuring consistency across the cluster.

- Kubelet: The kubelet manages containers and pods on a Kubernetes node, ensuring they run as expected by receiving and executing PodSpecs. It interacts with the container runtime, monitors container health, and manages networking and storage for pods.

- Kube-proxy: kube-proxy is a network proxy on each node that maintains network rules and enables access to Kubernetes services from within or outside the cluster. It provides load balancing for services with multiple pods and manages network communication between pods and external clients.
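The scheduler's job of matching pods to nodes can be pictured as a filter step followed by a scoring step. The Python sketch below is a toy illustration of that idea only, not the real scheduler's algorithm. The `Node` class, the `pick_node` function, and the "most free CPU wins" scoring rule are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


def pick_node(nodes: List[Node], cpu_request: int,
              memory_request: int) -> Optional[str]:
    """Toy scheduler: filter out nodes that cannot fit the pod,
    then score the rest by how much capacity would remain."""
    # Filter: keep only nodes that satisfy both resource requests.
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= cpu_request
        and n.free_memory_mib >= memory_request
    ]
    if not feasible:
        return None  # in a real cluster the pod would stay Pending
    # Score: prefer the node with the most CPU (then memory) left over.
    best = max(
        feasible,
        key=lambda n: (n.free_cpu_millicores - cpu_request,
                       n.free_memory_mib - memory_request),
    )
    return best.name


# Hypothetical cluster with three nodes of different sizes.
cluster = [
    Node("node-a", 500, 1024),
    Node("node-b", 2000, 4096),
    Node("node-c", 4000, 512),
]
print(pick_node(cluster, cpu_request=1000, memory_request=1024))
```

This is why accurate resource requests matter for legacy applications: a memory-hungry application that declares too little may be placed on a node that cannot actually hold it.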

Security, Ports, and S-NAT! Oh my!

It’s important to know ahead of time which kind of K8s you will be running your software on, because every flavor of K8s has slightly different requirements around its security model. Legacy applications very often use older ports and protocols that are now considered insecure. An older application is therefore likely to run into compatibility issues in a modern environment with all of its security functionality.

You’ll need to take several things into consideration: the network stack, how it handles ingress traffic, whether that traffic really needs to be handled that way, which firewall rules need to be updated, and which permission rules need to change to enable the application to run. As an example, an application that needs to run as the root user will face issues on OpenShift, which by default runs containers under an arbitrary non-root user ID.
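As a rough illustration of the non-root expectation, the Python sketch below builds a Pod manifest whose security settings ask Kubernetes to refuse to start the container as root. The field names (`runAsNonRoot`, `allowPrivilegeEscalation`, `capabilities.drop`) are standard Pod API fields, while the function name, pod name, and image reference are hypothetical. The manifest is printed as JSON, which `kubectl apply -f` accepts alongside YAML.

```python
import json


def non_root_pod(name: str, image: str) -> dict:
    """Build a Pod manifest that must not run as UID 0."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # The kubelet refuses to start containers that would run as root.
            "securityContext": {"runAsNonRoot": True},
            "containers": [{
                "name": name,
                "image": image,
                "securityContext": {
                    "allowPrivilegeEscalation": False,
                    "capabilities": {"drop": ["ALL"]},
                },
            }],
        },
    }


# Hypothetical name and image, used only for illustration.
manifest = non_root_pod("legacy-app", "registry.example.com/legacy-app:1.0")
print(json.dumps(manifest, indent=2))
```

Trying a legacy image against settings like these early on is a cheap way to find out whether it silently depends on root before it reaches a stricter platform.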

Another issue that pops up is around port permissions. In the Unix/Linux world, ports below 1024 are considered privileged ports, and root access or an elevated privilege is required to bind to them. Legacy applications are often designed to listen directly on ports below 1024, on the assumption that they will be started as root. Using those ports from a container requires a special privilege. Which ports are available, and which require special permissions, can differ for each type of K8s.
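One common way around the privileged port problem, assuming the application's listen port can be reconfigured, is to have the container listen on a high port and let a Kubernetes Service expose the familiar low port. The Python sketch below shows the idea. On a default Linux configuration the privileged range is ports below 1024. The helper names, the service name, and the port numbers are assumptions for illustration.

```python
PRIVILEGED_PORT_LIMIT = 1024  # default Linux boundary for privileged ports


def is_privileged(port: int) -> bool:
    """Return True if binding to this port normally needs elevated privilege."""
    if not 1 <= port <= 65535:
        raise ValueError(f"invalid port: {port}")
    return port < PRIVILEGED_PORT_LIMIT


def service_for(name: str, public_port: int, container_port: int) -> dict:
    """Build a Service that maps a public port to an unprivileged container port."""
    if is_privileged(container_port):
        raise ValueError("container should listen on an unprivileged port")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": name},
            "ports": [{
                "protocol": "TCP",
                "port": public_port,          # what clients connect to
                "targetPort": container_port,  # what the container binds
            }],
        },
    }


# Hypothetical legacy web app: clients still use port 80,
# but the process inside the container binds 8080.
svc = service_for("legacy-web", public_port=80, container_port=8080)
print(svc["spec"]["ports"][0])
```

If the application cannot be moved off its low port, the alternative is to grant the container the `NET_BIND_SERVICE` capability, which some platforms restrict by default.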

Another fun challenge is S-NAT, which stands for “source network address translation.” With SNAT, Kubernetes networking can rewrite the source IP address of a packet so that the traffic appears to come from a different location, such as a cluster node, rather than from the original client. This is a side effect of how K8s handles network traffic. It causes issues for software like OpenNMS, which needs to tag the original source of the traffic for tracking and analysis purposes.
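One setting that is often relevant here is `externalTrafficPolicy: Local` on a Service of type `LoadBalancer` or `NodePort`, which asks Kubernetes to deliver external traffic only to pods on the receiving node and to keep the client source IP. The Python sketch below builds such a Service manifest. The function name, service name, and port numbers are hypothetical, and whether the source address survives end to end still depends on the load balancer and network plugin in use.

```python
import json


def source_ip_preserving_service(name: str, port: int, target_port: int,
                                 protocol: str = "UDP") -> dict:
    """Build a LoadBalancer Service that asks Kubernetes to keep client IPs."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            # Route only to pods on the receiving node, avoiding the extra
            # hop that would otherwise rewrite the source address.
            "externalTrafficPolicy": "Local",
            "selector": {"app": name},
            "ports": [{
                "protocol": protocol,
                "port": port,
                "targetPort": target_port,
            }],
        },
    }


# Hypothetical listener for SNMP traps (162/udp), forwarded to an
# unprivileged port inside the container.
trap_service = source_ip_preserving_service("trap-listener", 162, 1162)
print(json.dumps(trap_service, indent=2))
```

The trade-off is that nodes without a matching pod drop the traffic, so pod placement and load balancer health checks need attention when using this policy.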