OpenNMS Architecture

OpenNMS is a network management platform that can, in some senses, be considered a legacy application: it has been on the market for almost two decades and relies on many well-established monitoring protocols. It has a modular architecture, so components can be added or extended to meet the specific needs of different network environments, and it is designed to be scalable and flexible enough to monitor large, complex networks.

Its architecture consists of several key components:

- Data Collection: OpenNMS uses a data collection system to gather information about the network, including device status, performance metrics, and events. This system includes data collectors for SNMP, ICMP, JMX, and other protocols, as well as a polling engine for regularly querying devices.

- Event Management: OpenNMS includes an event management system that processes and correlates events generated by devices on the network. It uses a rules engine to define event processing logic and can generate notifications based on event criteria.

- Topology Discovery: OpenNMS can automatically discover the topology of the network, including devices, interfaces, and connections between devices. This information is used for visualization and monitoring purposes.

- Alarm Management: OpenNMS manages alarms generated by events or threshold violations. It includes features for acknowledging, escalating, and clearing alarms, and for generating notifications to operators.

- Performance Monitoring: OpenNMS provides performance monitoring capabilities, including the collection and storage of performance data over time. It includes features for creating and viewing graphs of performance metrics.

- Web Interface: OpenNMS includes a web-based user interface for monitoring and managing the network. The interface provides dashboards, views, and reports for displaying network status and performance information.

- REST API: OpenNMS exposes a REST API that allows external systems to interact with the platform. This API can be used for automation, integration with other systems, and custom application development.
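To make the relationship between events and alarms more concrete, here is a minimal Python sketch of event reduction: repeated problem events that share a key are folded into a single alarm whose count increases, and a matching clear event resolves it. It is loosely modeled on the reduction-key idea OpenNMS uses in its event configuration, but the `Event` and `Alarm` classes, the `process_event` function, and the `condition:node_id` key format are hypothetical illustrations, not OpenNMS code or its configuration schema.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Event:
    condition: str          # what went wrong, e.g. "node-down" (hypothetical name)
    node_id: int            # which monitored node the event is about
    is_clear: bool = False  # True for a resolving event, e.g. the node coming back


@dataclass
class Alarm:
    reduction_key: str
    count: int = 1
    cleared: bool = False


def process_event(alarms: Dict[str, Alarm], event: Event) -> Optional[Alarm]:
    """Fold an event into the alarm table, keyed by a hypothetical reduction key."""
    key = f"{event.condition}:{event.node_id}"
    alarm = alarms.get(key)
    if event.is_clear:
        # A resolving event clears the matching alarm, if there is one.
        if alarm is not None:
            alarm.cleared = True
        return alarm
    if alarm is None or alarm.cleared:
        # First occurrence, or a recurrence after a clear: open a new alarm.
        alarm = Alarm(reduction_key=key)
        alarms[key] = alarm
    else:
        # Duplicate problem event: reduce it into the existing alarm.
        alarm.count += 1
    return alarm


demo_alarms: Dict[str, Alarm] = {}
for ev in (Event("node-down", 7), Event("node-down", 7), Event("node-down", 7, is_clear=True)):
    process_event(demo_alarms, ev)
print(demo_alarms["node-down:7"])  # Alarm(reduction_key='node-down:7', count=2, cleared=True)
```

Reducing duplicates this way is what keeps an operator's alarm list readable: two identical outage events become one alarm with a count of 2, rather than two separate entries.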

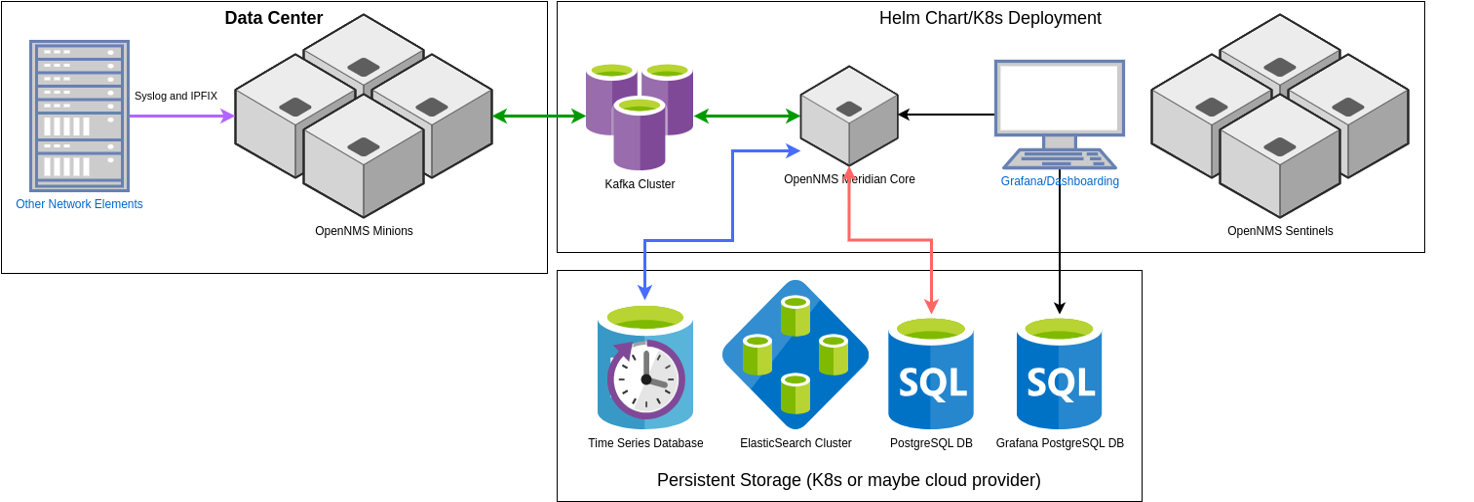

How do you fit OpenNMS into Kubernetes?

1. Start with containers!

Containers are lightweight, portable units that package an application and its dependencies, enabling consistent deployment across different environments. Each container runs in isolation, so applications do not interfere with each other, and because containers share the host OS kernel, they are more efficient than virtual machines. That efficiency allows higher density and faster startup. Containers can also be moved easily between environments, which makes them well suited to CI/CD pipelines. Kubernetes manages the deployment and scaling of these containers: it can automatically adjust the number of running instances based on demand, and its self-healing capabilities restart or replace failed containers to keep the application available. The result is a consistent, isolated, and efficient way to deploy and run applications at scale.
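Scaling "based on demand" follows a simple rule. As a sketch, here is the formula the Kubernetes Horizontal Pod Autoscaler documents, desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), written in Python with the default 10% tolerance and min/max replica bounds. The function name, the default bounds, and the example numbers are assumptions for illustration; the real controller also accounts for pod readiness, missing metrics, and stabilization windows, which this sketch ignores.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA scaling rule (simplified sketch)."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(2, 90, 50))  # 4: CPU at 90% against a 50% target scales out
print(desired_replicas(4, 20, 50))  # 2: low load scales in
print(desired_replicas(3, 52, 50))  # 3: within tolerance, no change
```

Note that this rule only helps components that can actually scale out; adding replicas of a single-instance application gains nothing.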

2. With a Helm Chart!

Helm itself is a package manager for Kubernetes that helps manage the lifecycle of Kubernetes applications. It simplifies the process of deploying, upgrading, and managing applications on Kubernetes by providing a templating system for defining Kubernetes resources and a command-line interface for interacting with Kubernetes clusters.

A Helm chart is the package Helm uses to define and manage a Kubernetes application. It bundles all the Kubernetes resources needed to run the application, such as deployments, services, ingress rules, and configuration files, into a single unit. Charts are written as YAML templates (using Go template syntax) together with a values file that supplies configuration, so they can be easily shared and reused. That makes them a popular way to define and deploy complex applications on Kubernetes.
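The core of Helm's templating is "chart defaults, overlaid with your values, rendered into manifests." The Python sketch below imitates that flow: nested maps in user-supplied values are merged key by key over the chart's defaults, and any other value replaces the default outright, which mirrors how Helm combines `values.yaml` with files passed via `-f`. Helm itself renders Go templates such as `{{ .Values.image.tag }}`; `string.Template` is only a stand-in here, and the image name, tag, and value keys are placeholders rather than the settings of any real OpenNMS chart.

```python
from copy import deepcopy
from string import Template


def merge_values(defaults: dict, overrides: dict) -> dict:
    """Recursively overlay user overrides onto chart defaults.

    Nested maps are merged key by key; any other value is replaced outright.
    """
    merged = deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_values(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


# Placeholder chart defaults, standing in for a chart's values.yaml.
chart_defaults = {
    "image": {"repository": "opennms/horizon", "tag": "latest"},
    "replicaCount": 1,
}

# Placeholder user overrides, like a file passed with `helm install -f my-values.yaml`.
user_values = {"image": {"tag": "1.0.0"}}

# Python stand-in for a Go-templated fragment of templates/deployment.yaml.
manifest_template = Template("image: $repository:$tag\nreplicas: $replicas")

values = merge_values(chart_defaults, user_values)
rendered = manifest_template.substitute(
    repository=values["image"]["repository"],
    tag=values["image"]["tag"],
    replicas=values["replicaCount"],
)
print(rendered)
```

Only the tag changes; the repository and replica count fall through from the defaults, which is why a small values file is usually enough to customize a large chart.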

3. Move the Minion out of Kubernetes

To work around the SNAT (source network address translation) issue described above, our recommendation is to move the OpenNMS Minion out of Kubernetes entirely. Outside the Kubernetes environment, the Minion does not have to deal with that address translation, so it sees the original source address of incoming traffic directly. Minions are fairly lightweight; the idea behind them is that they run in remote data centers, and you can deploy them almost anywhere. Placing the Minion outside the cluster is therefore a natural fit, and it resolves the problem.
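The toy simulation below shows why SNAT matters to a monitoring system. When traffic such as an SNMP trap enters a cluster through a Service with `externalTrafficPolicy: Cluster`, the source address can be rewritten to a node address, so a receiver that identifies devices by source IP can no longer attribute the message. The `Packet` class, the `snat` and `resolve_device` functions, and the addresses (taken from documentation ranges) are hypothetical; real packet handling happens in the cluster's networking layer, not in application code.

```python
from dataclasses import dataclass, replace
from typing import Dict, Optional


@dataclass(frozen=True)
class Packet:
    src_ip: str
    payload: str


def snat(packet: Packet, node_ip: str) -> Packet:
    # Source NAT rewrites the sender address to the translating node's address.
    return replace(packet, src_ip=node_ip)


def resolve_device(packet: Packet, inventory: Dict[str, str]) -> Optional[str]:
    # The receiver attributes traps and syslog messages by source address.
    return inventory.get(packet.src_ip)


# Hypothetical inventory and trap using documentation IP ranges.
inventory = {"192.0.2.10": "core-router-1"}
trap = Packet(src_ip="192.0.2.10", payload="linkDown")

# Minion inside the cluster: the trap arrives after SNAT to a node IP.
inside = resolve_device(snat(trap, node_ip="198.51.100.5"), inventory)
# Minion outside the cluster: the trap arrives with its original source address.
outside = resolve_device(trap, inventory)

print(inside, outside)  # None core-router-1
```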

Don’t Worry! This is a Journey…

- Don't give up on your legacy applications!

You probably couldn't even if you wanted to! Legacy applications aren't likely to go away any time soon; that's part of why we call them legacy. They represent technical debt, or IT debt, accumulated over time, and they are typically sticky: you can't just get rid of them. There's often a specific reason for keeping them around, such as ties to compliance requirements or to a specific business strategy. It's the same reason mainframes are still in use.

- Be Ready: Kubernetes does not always make things easier

The paradigms that Kubernetes brings into play can make things more complicated. It’s inherently meant for highly distributed, highly scalable architectures. If your app is monolithic and not distributable, or if it can't scale out rather than up, you're inevitably going to run into issues.

Automation is another challenge. Kubernetes has paradigms for scalability, security, and deployment, along with automation that delivers and installs applications according to those paradigms. When you deploy an app into Kubernetes, that automation still needs to be written. Somebody has to write it; it won't magically write itself.
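Much of that automation follows the controller pattern Kubernetes itself is built on: compare the desired state with the observed state and act on the difference. The Python sketch below shows the core of one reconciliation step for replica counts. The `reconcile` function, the component names, and the action strings are hypothetical; a real operator would query and update the Kubernetes API and run this comparison continuously rather than return a list once.

```python
from typing import Dict, List


def reconcile(desired: Dict[str, int], actual: Dict[str, int]) -> List[str]:
    """Return the actions needed to move actual replica counts to the desired state."""
    actions = []
    # Consider every component that is either wanted or currently running.
    for name in sorted(desired.keys() | actual.keys()):
        want = desired.get(name, 0)
        have = actual.get(name, 0)
        if have < want:
            actions.append(f"start {want - have} x {name}")
        elif have > want:
            actions.append(f"stop {have - want} x {name}")
    return actions


desired_state = {"app-web": 2, "app-worker": 1}
observed_state = {"app-web": 1, "app-old": 1}
print(reconcile(desired_state, observed_state))
# ['stop 1 x app-old', 'start 1 x app-web', 'start 1 x app-worker']
```

For a legacy application, the hard part is usually not this loop but everything around it: knowing what "healthy" means for the app and how to start it safely.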

- Start with containerization

You need to have an app that runs in a container. If you don't have an app that runs in a container, you can't really run it in Kubernetes.

- Persistent storage and database needs

Once you've achieved containerization, you need to figure out your storage needs: whether that's a database or just persistent storage for configuration, you have to make it work in Kubernetes. One of the ideas behind Kubernetes is that applications are treated as “cattle, not pets.” You have lots of them, they don't get the individual attention you would give a single application, and the containers within Kubernetes are inherently ephemeral. If one gets knocked over or dies, you just spin up a new one. If you try that with a legacy application, the new container might not have all of the configuration data, so you need a configuration store somewhere. Likewise, any databases the application relies on must live on persistent storage so you can access them without worrying about data loss.
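The configuration problem can be illustrated with a toy model. In this Python sketch, the `Container` class, the `replace_container` function, the `poll-interval` setting, and the JSON file standing in for a persistent volume are all hypothetical. The point is only that state kept inside a container disappears when the container is replaced, while state written to external storage survives.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, Optional


class Container:
    """Toy container: config held only in memory is lost when it is replaced."""

    def __init__(self, config_store: Optional[Path] = None) -> None:
        self.config_store = config_store
        self.config: Dict[str, str] = self._load()

    def _load(self) -> Dict[str, str]:
        # Read configuration from the external store, if one is attached.
        if self.config_store is not None and self.config_store.exists():
            return json.loads(self.config_store.read_text())
        return {}

    def set(self, key: str, value: str) -> None:
        self.config[key] = value
        # Persist only when an external store (e.g. a mounted volume) exists.
        if self.config_store is not None:
            self.config_store.write_text(json.dumps(self.config))


def replace_container(old: Container) -> Container:
    # A replacement starts fresh from the image; only attached storage carries over.
    return Container(config_store=old.config_store)


ephemeral = Container()
ephemeral.set("poll-interval", "300s")
ephemeral_after = replace_container(ephemeral).config
print(ephemeral_after)  # {}

with tempfile.TemporaryDirectory() as volume:
    persistent = Container(config_store=Path(volume) / "config.json")
    persistent.set("poll-interval", "300s")
    persistent_after = replace_container(persistent).config
    print(persistent_after)  # {'poll-interval': '300s'}
```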

- Re-architect for scale and resiliency

You don't start off by trying to re-architect the app; you don't start with the hardest parts. You start with the easy stuff to get it running in the environment. If you do get to the point of re-architecting, you will want to move toward a multi-tier application rather than a single-tier, monolithic one.

Conclusion

As I said in the beginning, legacy applications often still provide irreplaceable value to organizations. Making them work with modern automated software deployment, scaling, and management solutions is worth the effort. It’s a matter of understanding the Kubernetes flavor you’re using and the requirements of the software you’re working with, then adjusting to fit. Following the steps above will put you on the path to extending the life of your priceless legacy applications even further.